Unlike most of our projects, this one had a very specific user demographic, which allowed us to tailor our approach to suit them.

While a voice user interface is far more natural to novice users than a touch screen or joystick, things commonly go wrong when the voice assistant misinterprets or misunderstands what the user has said. Ultimately, a voice assistant is listening for a command, followed by some detail relating to that command. In theory, it shouldn’t matter how you phrase that command, so long as the trigger words are present.

When novice users interact with a voice assistant, their expectations are likely to exceed what the technology can actually do. Generally, Alexa skills put the onus on the user to know which commands to give and how to phrase them. We wanted to ensure that the voice assistant did the legwork, allowing Elifar’s residents to communicate with ease.

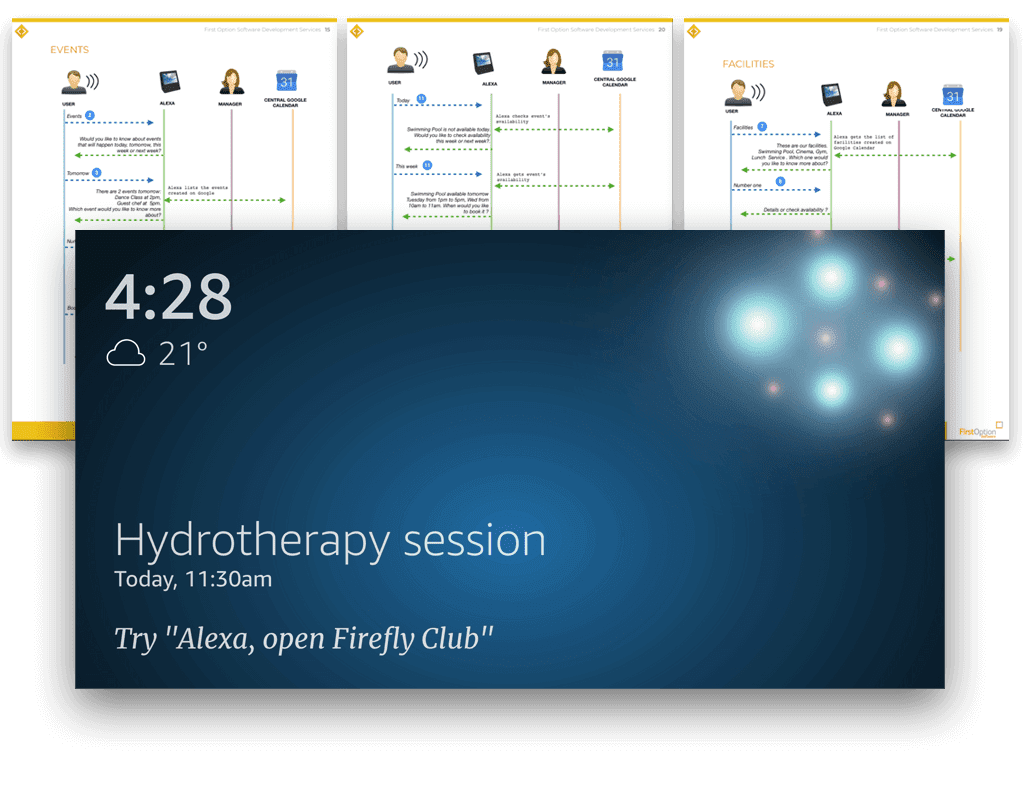

Firstly, we addressed the pace of the interaction. With the right triggers, voice assistants can understand and respond to complicated requests, but that complexity brings more potential for error. Instead, we designed the VUI (voice user interface) to build up a single request from multiple commands. This way, the voice assistant leads the user through the process, asking simple questions that call for straightforward answers.

The result is an interaction that takes longer to complete but is less likely to fail. We drew upon our experience of designing an app for the elderly, which taught us that consistent, repetitive interactions aid understanding and retention.
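The guided, one-question-at-a-time approach can be illustrated with a minimal Python sketch of a dialogue that fills a single request from several short answers. This is not the Alexa Skills Kit API or the code behind the Elifar skill; the taxi-booking request, its slot names and prompts, and the `GuidedRequest` class are all hypothetical, chosen only to show the pattern.

```python
from dataclasses import dataclass, field

# Hypothetical request: these slot names and prompts are illustrative
# assumptions, not taken from the actual Elifar skill.
SLOTS = [
    ("destination", "Where would you like to go?"),
    ("day", "Which day would you like to travel?"),
    ("time", "What time would you like to be picked up?"),
]


@dataclass
class GuidedRequest:
    """Builds one request from a series of short, simple answers."""
    answers: dict = field(default_factory=dict)

    def next_prompt(self):
        """Return the question for the first unfilled slot, or None when complete."""
        for name, prompt in SLOTS:
            if name not in self.answers:
                return prompt
        return None

    def answer(self, text):
        """Store the reply against the slot currently being asked about."""
        for name, _ in SLOTS:
            if name not in self.answers:
                cleaned = text.strip()
                # An empty reply leaves the slot open, so the same question is asked again.
                if cleaned:
                    self.answers[name] = cleaned
                return

    def confirmation(self):
        """Read the assembled request back to the user before acting on it."""
        if self.next_prompt() is not None:
            raise ValueError("request is incomplete")
        a = self.answers
        return (f"So that's a taxi to {a['destination']} on {a['day']} "
                f"at {a['time']}. Is that right?")


if __name__ == "__main__":
    req = GuidedRequest()
    # The empty second reply simulates a misheard answer.
    for reply in ["the doctor's surgery", "", "Tuesday", "ten o'clock"]:
        print(req.next_prompt())
        req.answer(reply)
    print(req.confirmation())
```

Asking for one detail per turn keeps each question easy to answer, and repeating the same question after an unusable reply gives the consistent, repetitive rhythm described above, at the cost of a longer exchange.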